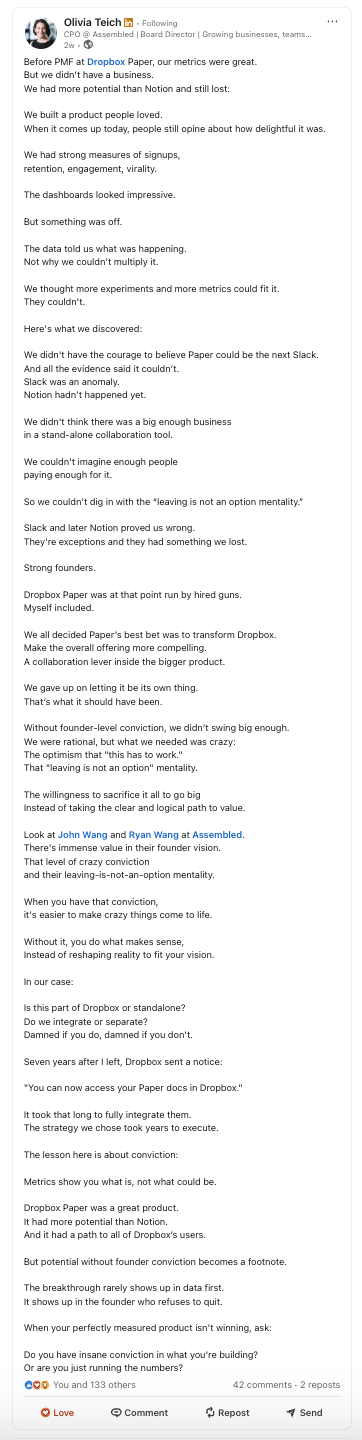

I came across this post by Olivia Teich about Dropbox Paper and it hit home. Olivia led Product for all collaborative products at Dropbox for a while and her reflection on Paper's failure stung because I lived through it.

Y’all, when I tell you Paper was Notion before there was Notion. Smh.

It was clean, thoughtfully designed, and cupcake (iykyk). I genuinely loved using it. (Who loves using software? 🙃)

But while the product dashboards might have "looked impressive," the quality dashboards most definitely did not. Even when I joined Dropbox in 2016, at the height of investment in Paper, our quality metrics told a different story than the product metrics. And over time, the experience became less and less delightful, and Paper died slowly.

It reminded me of something written in 2024 that I only came across recently, about how Google Search lost its soul. It happened by design (because they were optimizing for growth), and also unintentionally (because… who does that?).

Ed Zitron's piece, The Man Who Killed Google Search, details how Google deliberately optimized for the numbers that "mattered" while product quality and user trust seemingly became acceptable trade-offs.

This isn't new. Ask any QA person who's pushed for better product quality and gotten pushback because the tests were green, SLAs were met, velocity was high. You get the idea.

But poor product quality still bugs users, even when your metrics tell you that things are fine. And death by a thousand paper cuts is still death.

The Canary in the Coal Mine

In my interview with Ministry of Testing, I brought up an analogy that I like to use. If product development is a car, then QA used to be the brakes. We were good at stopping the car before the accident happened. Now we're looked at as the entire safety system: brakes, gear shifts, backup cameras, collision detection…

But maybe what we really need to be are the canaries in the coal mine. (I know, new metaphor.)

Quality folks see things breaking before anyone else. We notice when the product starts feeling *off*. But if our measurement systems (or the environments we’re working in) only reward green dashboards, then we can fall into that same trap of optimizing for metrics that make everyone feel good while the product slowly degrades.

Signs You're Optimizing for the Wrong Things

Here are some indicators I’ve learned to watch for:

Your metrics tell you about volume

Test count is up but coverage of critical paths is low (or unknown, more likely 🤔). Or bug triage volume is high but bug backlogs are constantly growing and your resolution rate is in the toilet.
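To make that concrete, here's a rough sketch of what checking those two signals could look like. Everything in it is made up for illustration: the `WeeklyBugStats` shape, the path names, and the numbers are assumptions, not data from Dropbox or any real tracker. The point is just that "how many critical paths have a test" and "are we closing bugs as fast as we open them" are different questions from "how many tests do we have."

```python
from dataclasses import dataclass


@dataclass
class WeeklyBugStats:
    """Hypothetical weekly export from a bug tracker."""
    opened: int    # bugs filed this week
    resolved: int  # bugs closed this week


def resolution_rate(weeks: list[WeeklyBugStats]) -> float:
    """Resolved / opened over the window. Below 1.0 means the backlog is growing."""
    opened = sum(w.opened for w in weeks)
    resolved = sum(w.resolved for w in weeks)
    return resolved / opened if opened else 1.0


def critical_path_coverage(critical_paths: set[str], tested_paths: set[str]) -> float:
    """Share of critical user paths that have at least one test."""
    if not critical_paths:
        return 0.0
    return len(critical_paths & tested_paths) / len(critical_paths)


# Illustrative numbers only.
weeks = [WeeklyBugStats(40, 25), WeeklyBugStats(35, 20), WeeklyBugStats(50, 30)]
critical = {"signup", "checkout", "share_doc", "export"}
tested = {"signup", "settings", "profile", "theme_picker", "share_doc"}

print(f"tests written against {len(tested)} paths")
print(f"resolution rate: {resolution_rate(weeks):.2f}")
print(f"critical path coverage: {critical_path_coverage(critical, tested):.0%}")
```

In this made-up example there are five tested paths, which looks healthy as a count, but only half of the critical paths are covered and the team resolves 0.60 bugs for every one opened. What counts as a "critical path" is a judgment call your team has to make; the code can't decide that for you.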

Quality conversations happen in silos

When was the last time someone asked about quality in a roadmap meeting or a product review?

Your best insights come from production

If you’re regularly surprised by post-launch discoveries, your quality strategy is reactive.

Green dashboards exist alongside frustrated users

Are you setting your metric targets for what’s achievable with minimal effort, or for what’s actually good according to your users (and presumably, the people who pay your company money)?

What To Do Instead

Instead of measuring what's easy to count and easy to hit, measure what actually matters to your users.

Pair every metric with a story. What does this number mean? What's not showing up here? What would change our behavior if this metric moved?

Track risk. Bug severity trends. Regression patterns. Coverage gaps in areas that actually matter to users.

Make space for qualitative insights. It’s easy when something is obviously broken. But what about when something feels shaky or makes you nervous? What would you never recommend to a friend?

Push upstream. Those quality metrics should be influencing roadmaps or something. What’s your something?

Stop reporting success when the product isn't great. This is the hardest one. It means having uncomfortable conversations about why the dashboards look good but users are unhappy.

The Real Work

QA exists to make the product good. (And sustainable, and trustworthy, and sane to ship and support 💁‍♀️.) That means choosing product integrity over performance theater, even when it's awkward.

Especially when it's awkward.

The breakthrough rarely shows up in data first. It shows up in the person who refuses to accept that "the metrics look fine" is a sufficient answer when the product feels wrong.

Your quality metrics should tell you whether you're building something worth building. If they can't do that, they're measuring compliance. And compliance never built a product anyone loved.